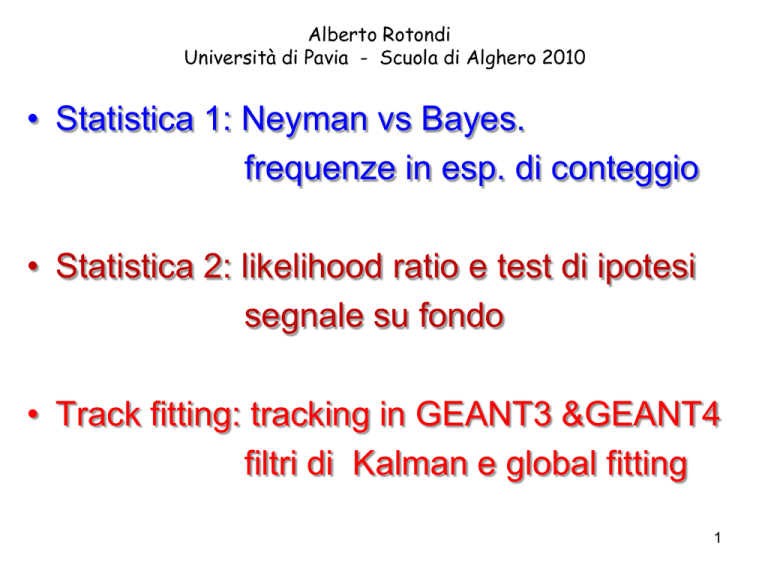

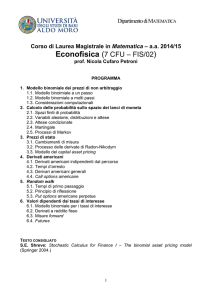

Alberto Rotondi

Università di Pavia - Scuola di Alghero 2010

• Statistics 1: Neyman vs Bayes.

frequencies in counting experiments

• Statistics 2: likelihood ratio and hypothesis testing

signal over background

• Track fitting: tracking in GEANT3 & GEANT4

Kalman filters and global fitting


PHYSTAT 05 - Oxford 12th - 15th September 2005

Statistical problems in Particle Physics, Astrophysics and Cosmology


Frequentist confidence intervals

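[Figure: Neyman confidence belt. For the true value θ the band x1 < x < x2 contains a probability CL; an observed x gives the possible interval θ1 < θ < θ2.]

$$P(\theta_1 < \theta < \theta_2) = P(x_1 < x < x_2) = CL$$

because θ1 < θ < θ2 when x1 < x < x2.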

NEYMAN INTEGRALS

Elementary statistics may be WRONG!!

Because P{Q} does not contain the parameter!

Estimation of the sample mean

since

Due to the Central Limit theorem we have a pivot quantity

when N>>1

Hence:


Hence, we have three methods to find confidence intervals with the Neyman (frequentist) technique:

• Graphical method (Neyman band)

• Analytic method with the Neyman integrals (Clopper-Pearson method)

• Inversion method (pivot variable)

Counting experiments

$$P\{|x - \mu| \le t\,\sigma[x]\} = CL$$

CL is the asymptotic probability that the interval will contain the true value.

COVERAGE is the probability that the specific experiment does contain the true value, irrespective of what the true value is.

Over the infinite ensemble of experiments, for a continuous variable, coverage and CL tend to coincide.

In counting experiments the variables are discrete, and CL and coverage do not coincide.

What is required is the minimum overcoverage.

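Counting experiments: Binomial case

$$P\{|F - p| \le t\,\sigma[p]\} = P\left\{\frac{|F - p|}{\sqrt{p(1-p)/n}} \le t\right\} = CL$$

t is the quantile of the normal distribution: for t = 1 the area up to t is 84% (quantile = 0.84) and P[|f − p| < tσ] = 68%.

Solving the inequality for p:

$$\frac{|f - p|}{\sqrt{p(1-p)/n}} \le |t| \quad\Rightarrow\quad p \in \frac{f + \dfrac{t^2}{2n} \pm t\sqrt{\dfrac{t^2}{4n^2} + \dfrac{f(1-f)}{n}}}{1 + \dfrac{t^2}{n}}$$

Wilson interval (1934)

For n ≫ 1 this reduces to

$$p \in f \pm t\sqrt{\frac{f(1-f)}{n}}$$

Wald (1950), standard in physics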
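The two binomial formulas above can be compared numerically. The following is a minimal sketch, assuming a central interval so that t is the Gaussian quantile at (1 + CL)/2; the function names `wald_interval` and `wilson_interval` are illustrative and do not come from the slides.

```python
from math import sqrt
from statistics import NormalDist


def wald_interval(x, n, cl=0.90):
    """Wald interval f +/- t*sqrt(f(1-f)/n) for x successes in n trials."""
    t = NormalDist().inv_cdf(0.5 + cl / 2.0)  # two-sided Gaussian quantile
    f = x / n
    half = t * sqrt(f * (1.0 - f) / n)
    return f - half, f + half


def wilson_interval(x, n, cl=0.90):
    """Wilson interval obtained by solving |f - p| <= t*sqrt(p(1-p)/n) for p."""
    t = NormalDist().inv_cdf(0.5 + cl / 2.0)
    f = x / n
    centre = f + t * t / (2.0 * n)
    half = t * sqrt(t * t / (4.0 * n * n) + f * (1.0 - f) / n)
    norm = 1.0 + t * t / n
    return (centre - half) / norm, (centre + half) / norm


if __name__ == "__main__":
    print("Wald  :", wald_interval(5, 20))
    print("Wilson:", wilson_interval(5, 20))
    # With x = 0 the Wald interval collapses to a single point.
    print("Wald, x = 0:", wald_interval(0, 20))
```

For x = 0 the Wald interval has zero width, which is one way to see why the slides argue against it for small samples.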

Counting experiments: Poisson case

$$P\{|x - \mu| \le t\sqrt{\mu}\} = CL$$

$$\mu \in x + \frac{t^2}{2} \pm t\sqrt{x + \frac{t^2}{4}}$$

Wilson interval (1934)

$$\mu \in x \pm t\sqrt{x}$$

Wald (1950), standard in physics

Why complicate all this?

[Figure: the interval $f \pm t\sqrt{f(1-f)/n}$, valid for n ≫ 1, applied with n = 20; the limits p1 and p2 are shown against p.]

The 90% CL Gaussian upper limit

[Figure: Gaussian with 90% of the area above the observed value and 10% below; the observed value lies 1.28σ from the upper limit.]

Meaning I: with this upper limit, values less than the observed one are possible with a probability < 10%.

Meaning II: a larger upper limit should give values less than the observed one in less than 10% of the experiments.

Meaning III: the probability to be wrong is 10%.
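As a small numerical illustration of the upper limit above, the sketch below places the limit at the observed value plus the one-sided Gaussian quantile times σ (about 1.28σ for CL = 90%). The function name `gaussian_upper_limit` and the example numbers are assumptions made for illustration.

```python
from statistics import NormalDist


def gaussian_upper_limit(observed, sigma, cl=0.90):
    """One-sided upper limit: observed + t*sigma, with t the CL quantile."""
    t = NormalDist().inv_cdf(cl)  # about 1.28 for CL = 0.90
    return observed + t * sigma


if __name__ == "__main__":
    limit = gaussian_upper_limit(observed=0.0, sigma=1.0)
    # If the true value sat at the limit, values below the observed one
    # would occur with probability 1 - CL (Meaning I).
    tail = NormalDist(mu=limit, sigma=1.0).cdf(0.0)
    print(f"upper limit = {limit:.3f}, P(x < observed) = {tail:.3f}")
```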


The trigger problem

• P(T | μ) = 0.95: probability for a muon to give a trigger
• P(T | π) = 0.05: probability for a pion to give a trigger
• P(μ) = 0.10: probability to be a muon
• P(π) = 0.90: probability to be a pion
• P(π | T): probability that the trigger selects a pion
• P(μ | T): probability that the trigger selects a muon

The probability to be a muon after the trigger, P(μ | T):

$$P(\mu | T) = \frac{P(T|\mu)\,P(\mu)}{P(T|\mu)\,P(\mu) + P(T|\pi)\,P(\pi)} = \frac{0.95 \times 0.10}{0.95 \times 0.10 + 0.05 \times 0.90} = 0.678$$

The same result with 10,000 particles:

• prior: 9000 pions and 1000 muons
• pions: 8550 do not trigger, 450 trigger
• muons: 950 trigger, 50 do not trigger

Enrichment: 950/(950+450) = 68%

Efficiency: (950+450)/10,000 = 14%
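The trigger numbers can be reproduced with a direct application of Bayes' theorem. This is a minimal sketch; the function name `trigger_posterior` and its argument names are illustrative choices, not part of the slides.

```python
def trigger_posterior(p_trig_mu=0.95, p_trig_pi=0.05, p_mu=0.10):
    """Return (P(mu | T), P(T)) for a two-species beam of muons and pions."""
    p_pi = 1.0 - p_mu
    # Total trigger probability (the denominator of Bayes' theorem).
    p_trig = p_trig_mu * p_mu + p_trig_pi * p_pi
    return p_trig_mu * p_mu / p_trig, p_trig


if __name__ == "__main__":
    purity, efficiency = trigger_posterior()
    print(f"P(mu | T) = {purity:.3f}")      # enrichment of the sample
    print(f"P(T)      = {efficiency:.3f}")  # fraction of particles triggered
    # Same numbers from counting 10,000 particles.
    n_mu, n_pi = 1000, 9000
    trig_mu, trig_pi = 0.95 * n_mu, 0.05 * n_pi
    print(trig_mu / (trig_mu + trig_pi), (trig_mu + trig_pi) / 10000)
```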


Bayesian credible interval

Bayesians vs Frequentists

Why does ML work?

[Figure: hypothesis and observation]

$$-2\ln L(X;\mu) = -2\ln e^{-\frac{1}{2}\frac{(X-\mu)^2}{\sigma^2}} = \frac{(X-\mu)^2}{\sigma^2} = \chi^2(\mu)$$

Gaussian variables: ML corresponds to minimum χ²

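The weighted average

Consider the well-known weighted mean:

$$\chi^2(\mu) = \frac{(x_1-\mu)^2}{\sigma_1^2} + \frac{(x_2-\mu)^2}{\sigma_2^2}, \qquad \frac{d\chi^2(\mu)}{d\mu} = 0 \;\Rightarrow\; \mu = \frac{\dfrac{x_1}{\sigma_1^2} + \dfrac{x_2}{\sigma_2^2}}{\dfrac{1}{\sigma_1^2} + \dfrac{1}{\sigma_2^2}}$$

A simple algebraic manipulation gives the recursive form (Kalman filter):

$$\mu = \frac{\dfrac{x_1}{\sigma_1^2} + \dfrac{x_2}{\sigma_2^2}}{\dfrac{1}{\sigma_1^2} + \dfrac{1}{\sigma_2^2}} = \frac{\sigma_2^2\,x_1 + \sigma_1^2\,x_2}{\sigma_1^2 + \sigma_2^2} = x_1 + \frac{\sigma_1^2}{\sigma_1^2 + \sigma_2^2}\,(x_2 - x_1)$$

Kalman = the measurement is weighted with a model prediction (track following): x1 is the prediction, x2 the measured point, and σ1²/(σ1² + σ2²) the weight matrix.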
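The equivalence between the weighted mean and the recursive (Kalman-like) update can be checked numerically. This is a one-dimensional sketch under the assumption of two independent Gaussian measurements; the function names and the variance of the combined estimate are standard results added for illustration, not taken from the slides.

```python
def weighted_mean(x1, var1, x2, var2):
    """Inverse-variance weighted mean of two measurements and its variance."""
    w1, w2 = 1.0 / var1, 1.0 / var2
    return (w1 * x1 + w2 * x2) / (w1 + w2), 1.0 / (w1 + w2)


def kalman_update(prediction, var_pred, measurement, var_meas):
    """Recursive form: prediction corrected by gain * (measurement - prediction)."""
    gain = var_pred / (var_pred + var_meas)  # scalar analogue of the weight matrix
    estimate = prediction + gain * (measurement - prediction)
    return estimate, (1.0 - gain) * var_pred


if __name__ == "__main__":
    print(weighted_mean(10.0, 4.0, 12.0, 1.0))
    print(kalman_update(10.0, 4.0, 12.0, 1.0))
```

Both calls give the same estimate and variance, which is the content of the algebraic manipulation above.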

Gaussian variables:

weighted average = Bayes (uniform) = Likelihood


Elementary example

20 events have been generated and 5 passed the cut. What is the estimate of the efficiency with CL = 90%?

x = 5, n = 20, CL = 90%

Frequentist result:

$$\sum_{k=x}^{n}\binom{n}{k}\,p_1^k\,(1-p_1)^{n-k} = 0.05, \qquad \sum_{k=0}^{x}\binom{n}{k}\,p_2^k\,(1-p_2)^{n-k} = 0.05$$

[p1, p2] = [0.104, 0.455]

Bayesian result:

$$\frac{\int_{p_1}^{p_2} p^x\,(1-p)^{n-x}\,dp}{\int_0^1 p^x\,(1-p)^{n-x}\,dp} = 0.90$$

[p1, p2] = [0.122, 0.423]

What meaning??
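The frequentist limits of this example can be reproduced by solving the two binomial sums numerically. The sketch below uses plain bisection with the standard library only; the helper names are illustrative, and for x = 0 or x = n it simply returns 0 or 1 for the open end while keeping α/2 on the other side, which is an assumption of this sketch.

```python
from math import comb


def binom_cdf(k, n, p):
    """P(X <= k) for a binomial with n trials and success probability p."""
    return sum(comb(n, i) * p**i * (1.0 - p) ** (n - i) for i in range(k + 1))


def solve_increasing(func, target, lo=0.0, hi=1.0, iters=60):
    """Bisection for func(p) = target, with func increasing in p."""
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if func(mid) < target:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def clopper_pearson(x, n, cl=0.90):
    """Central interval from the two Neyman sums, each set to alpha/2."""
    alpha = 1.0 - cl
    # Lower limit: P(X >= x | p1) = alpha/2.
    p1 = 0.0 if x == 0 else solve_increasing(
        lambda p: 1.0 - binom_cdf(x - 1, n, p), alpha / 2.0)
    # Upper limit: P(X <= x | p2) = alpha/2, i.e. P(X > x | p2) = 1 - alpha/2.
    p2 = 1.0 if x == n else solve_increasing(
        lambda p: 1.0 - binom_cdf(x, n, p), 1.0 - alpha / 2.0)
    return p1, p2


if __name__ == "__main__":
    print(clopper_pearson(5, 20, 0.90))
```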

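Efficiency calculation: an OPEN PROBLEM!!

• Wilson interval (1934):

$$p \in \frac{f + \dfrac{t^2}{2n} \pm t\sqrt{\dfrac{t^2}{4n^2} + \dfrac{f(1-f)}{n}}}{1 + \dfrac{t^2}{n}}$$

• Wald (1950), standard in physics, for n ≫ 1:

$$p \in f \pm t\sqrt{\frac{f(1-f)}{n}}$$

• Exact frequentist, Clopper-Pearson (1934) (PDG), with α = 1 − CL:

$$\sum_{k=x}^{n}\binom{n}{k}\,p_1^k\,(1-p_1)^{n-k} = \alpha/2, \qquad \sum_{k=0}^{x}\binom{n}{k}\,p_2^k\,(1-p_2)^{n-k} = \alpha/2$$

• Bayes. This is not frequentist, but it can be tested in a frequentist way:

$$\frac{\int_{p_1}^{p_2} p^x\,(1-p)^{n-x}\,dp}{\int_0^1 p^x\,(1-p)^{n-x}\,dp} = CL\ ?$$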

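Coverage simulation

For a fixed true value p, repeat many times:

• generate x = gRandom->Binomial(N, p)

• with 1 − CL = α, find the limits p1 and p2 from

$$\sum_{k=x}^{N}\binom{N}{k}\,p_1^k\,(1-p_1)^{N-k} = \alpha/2, \qquad \sum_{k=0}^{x}\binom{N}{k}\,p_2^k\,(1-p_2)^{N-k} = \alpha/2$$

using TMath::BinomialI(p, N, x)

• if p1 ≤ p ≤ p2, increment the success counter (k++ on the slide)

The coverage is the fraction of successes (k/n on the slide, with n the number of simulated experiments). One expects ~ CL.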
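The coverage test can also be done without random numbers, by summing the binomial probabilities of all x whose interval contains the true p. The sketch below takes this exact-sum route instead of the Monte Carlo loop of the slide (a deliberate substitution), and applies it to the Wald and Wilson formulas; all function names are illustrative.

```python
from math import comb, sqrt
from statistics import NormalDist


def wald(x, n, t):
    f = x / n
    half = t * sqrt(f * (1.0 - f) / n)
    return f - half, f + half


def wilson(x, n, t):
    f = x / n
    centre = f + t * t / (2.0 * n)
    half = t * sqrt(t * t / (4.0 * n * n) + f * (1.0 - f) / n)
    return (centre - half) / (1.0 + t * t / n), (centre + half) / (1.0 + t * t / n)


def coverage(interval, p, n, cl):
    """Probability, over all possible x, that interval(x) contains the true p."""
    t = NormalDist().inv_cdf(0.5 + cl / 2.0)
    total = 0.0
    for x in range(n + 1):
        lo, hi = interval(x, n, t)
        if lo <= p <= hi:
            total += comb(n, x) * p**x * (1.0 - p) ** (n - x)
    return total


if __name__ == "__main__":
    for p in (0.05, 0.25, 0.50):
        print(p, coverage(wald, p, 20, 0.90), coverage(wilson, p, 20, 0.90))
```

Scanning p on a fine grid with this function gives the kind of coverage-versus-p curve shown in the plots of the following slides.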

Simulate many x with a true p and check when the intervals contain the true value p. Compare this frequency with the stated CL.

[Plot: CL = 0.95, n = 50]

[Plot: CL = 0.90, n = 20]

In the estimation of the efficiency (probability) the coverage is “chaotic”.

The new standard (not yet for physicists) is to use the exact frequentist or the formula

$$p \in \frac{f + \dfrac{t_{\alpha/2}^2}{2n} \pm t_{\alpha/2}\sqrt{\dfrac{t_{\alpha/2}^2}{4n^2} + \dfrac{f(1-f)}{n}}}{1 + \dfrac{t_{\alpha/2}^2}{n}}$$

with the special cases

$$x = n:\ [p_1, 1],\quad p_1 = (1-CL)^{1/n}; \qquad x = 0:\ [0, p_2],\quad p_2 = 1-(1-CL)^{1/n}$$

where f = x/n, t_{α/2} is the Gaussian quantile, 1 − CL = α, and t = 1 is 1σ.

The standard formula

$$f \pm t\sqrt{\frac{f(1-f)}{n}}$$

should be abandoned.

BYE-BYE

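A further improvement:

The continuity correction is equivalent to the Clopper-Pearson formula

$$p \in \frac{f_\pm + \dfrac{t_{\alpha/2}^2}{2n} \pm t_{\alpha/2}\sqrt{\dfrac{t_{\alpha/2}^2}{4n^2} + \dfrac{f_\pm(1-f_\pm)}{n}}}{1 + \dfrac{t_{\alpha/2}^2}{n}}$$

with the special cases

$$x = n:\ [p_1, 1],\quad p_1 = (1-CL)^{1/n}; \qquad x = 0:\ [0, p_2],\quad p_2 = 1-(1-CL)^{1/n}$$

where f_+ = (x + 0.5)/n, f_- = (x − 0.5)/n, t_{α/2} is the Gaussian quantile, 1 − CL = α, and t = 1 is 1σ.

This should become the standard formula also for physicists.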
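A possible implementation of the continuity-corrected Wilson formula is sketched below, assuming that the upper limit uses f_+ = (x + 0.5)/n with the plus sign and the lower limit uses f_- = (x − 0.5)/n with the minus sign, and that the cases x = 0 and x = n follow the special formulas of the slide. The function name `wilson_cc_interval` is illustrative.

```python
from math import sqrt
from statistics import NormalDist


def wilson_cc_interval(x, n, cl=0.90):
    """Wilson interval with continuity correction for x successes in n trials."""
    if x == 0:
        return 0.0, 1.0 - (1.0 - cl) ** (1.0 / n)
    if x == n:
        return (1.0 - cl) ** (1.0 / n), 1.0
    t = NormalDist().inv_cdf(0.5 + cl / 2.0)  # t_{alpha/2}

    def limit(f, sign):
        centre = f + t * t / (2.0 * n)
        half = t * sqrt(t * t / (4.0 * n * n) + f * (1.0 - f) / n)
        return (centre + sign * half) / (1.0 + t * t / n)

    return limit((x - 0.5) / n, -1.0), limit((x + 0.5) / n, +1.0)


if __name__ == "__main__":
    # Compare with the exact frequentist result [0.104, 0.455] quoted
    # for x = 5, n = 20, CL = 90% in the elementary example.
    print(wilson_cc_interval(5, 20, 0.90))
```

For x = 5, n = 20 the result is close to, but not identical with, the exact frequentist limits, so the equivalence stated on the slide should be read as approximate in this sketch.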

Continuity correction

When a discrete distribution (such as the binomial) is approximated by a continuous one (such as the Gaussian), the area of the rectangle centred on a discrete value x = ν of the variable, which represents the probability P(ν), can be estimated by the area under the continuous curve in the interval [ν − 0.5 ≤ x ≤ ν + 0.5].

[Figure: binomial (histogram of rectangles) and Gaussian (continuous curve). The binomial probability for x = ν = 7 is estimated by the area under the approximating normal curve in the interval [6.5 ≤ x ≤ 7.5].]

This is equivalent to treating the discrete value of the variable as the midpoint of the interval [ν − 0.5, ν + 0.5].

If the probability refers to a sequence of discrete values, that is, if the probability P(ν1) + P(ν2) + … + P(νk) is required, it can be approximated by the Gaussian probability in the interval [ν1 − 0.5 ≤ x ≤ νk + 0.5].

In more detail, here is how to apply the continuity correction for the different probabilities sought:

| Binomial probability | Interval over which to evaluate the Gaussian probability |
|---|---|
| P(x = ν) | [ν − 0.5 ≤ x ≤ ν + 0.5] |
| P(x ≤ ν) | [x ≤ ν + 0.5] |
| P(x < ν) | [x ≤ ν − 0.5] |
| P(x ≥ ν) | [x ≥ ν − 0.5] |
| P(x > ν) | [x ≥ ν + 0.5] |
| P(ν1 ≤ x ≤ νk) | [ν1 − 0.5 ≤ x ≤ νk + 0.5] |
| P(ν1 < x < νk) | [ν1 + 0.5 ≤ x ≤ νk − 0.5] |

The Gaussian approximation to the binomial is particularly useful for n ≫ 1 (large factorials in the binomial coefficients), and when the probabilities are summed over a long sequence of values. The continuity correction improves the approximation.

Example

a) Find the probability of getting heads 23 times in 36 tosses of a coin.

The required distribution is a binomial with n = 36, p = q = 1/2. The variable is ν = 23.

The binomial probability is:

$$P(\nu) = \frac{n!}{\nu!\,(n-\nu)!}\,p^{\nu} q^{n-\nu} = \frac{36!}{23!\,13!}\left(\frac{1}{2}\right)^{23}\left(\frac{1}{2}\right)^{13} = 3.36\%$$

Gaussian approximation with μ = np = 36 × 1/2 = 18 and σ = √(npq) = √(36 × 1/2 × 1/2) = 3.

Taking the continuity correction into account, the two values of the standardized variable corresponding to the ends of the integration interval [22.5, 23.5] are:

$$z_1 = \frac{22.5 - 18}{3} = 1.50, \qquad z_2 = \frac{23.5 - 18}{3} = 1.83$$

The binomial probability of getting heads 23 times can therefore be estimated with the Gaussian approximation as follows:

P1(0 ≤ z ≤ 1.50) = 43.32%

P2(0 ≤ z ≤ 1.83) = 46.64%

P(1.50 ≤ z ≤ 1.83) = P2 − P1 = 3.32%

The value is quite close to the one calculated exactly with the binomial.

Note: for a given value ν of the variable, the probability in the Gaussian approximation can also be estimated from the value of the normal density at x = ν.

The probability of a continuous distribution at a specific value of the variable is zero, as we have seen. Here, however, we are simply approximating the value of a discrete distribution (the binomial) with the value taken by the normal curve at that point.

We can therefore write:

$$P_{bin}(\nu) \simeq f_G(x=\nu) = \frac{1}{\sigma\sqrt{2\pi}}\,e^{-(x-\mu)^2/2\sigma^2}$$

In the case of the example:

$$P_{bin}(23) \simeq \frac{1}{3\sqrt{2\pi}}\,e^{-(23-18)^2/(2\cdot 3^2)} = 0.1330 \times 0.2494 = 0.0332 = 3.32\%$$

which coincides with the value found by evaluating the area in the interval [22.5, 23.5].

b) Find the probability of getting heads at least 23 times in 36 tosses.

With the Gaussian approximation, we need the normal probability in the interval [22.5, ∞], which estimates the binomial probability of getting heads 23 or more times:

P(z ≥ 1.5) = 50% − P(0 ≤ z < 1.5) = 50% − 43.32% = 6.68%
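The coin-tossing numbers of this example can be checked with the Python standard library. This is a minimal sketch; the variable names are illustrative, and the Gaussian values are computed with the unrounded z = 1.8333 rather than the tabulated z = 1.83, so the last digit can differ slightly from the slide.

```python
from math import comb, sqrt
from statistics import NormalDist

n, p = 36, 0.5
mu, sigma = n * p, sqrt(n * p * (1.0 - p))  # 18 and 3
gauss = NormalDist(mu, sigma)

# a) exactly 23 heads: exact binomial, Gaussian area on [22.5, 23.5],
#    and Gaussian density evaluated at x = 23
exact_23 = comb(n, 23) * p**23 * (1.0 - p) ** 13
area_23 = gauss.cdf(23.5) - gauss.cdf(22.5)
density_23 = gauss.pdf(23)

# b) at least 23 heads: exact binomial tail and Gaussian area above 22.5
exact_tail = sum(comb(n, k) for k in range(23, n + 1)) / 2**n
gauss_tail = 1.0 - gauss.cdf(22.5)

if __name__ == "__main__":
    print(f"P(23):    exact {exact_23:.4f}, area {area_23:.4f}, density {density_23:.4f}")
    print(f"P(>= 23): exact {exact_tail:.4f}, Gaussian {gauss_tail:.4f}")
```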

[Plot: N = 50, CL = 0.90]

[Plot: N = 50, CL = 0.95]

The likelihood ratio method

Maximize

$$\lambda(p, x) = \frac{L(p, x)}{L(p_{best}, x)}$$

or, equivalently, minimize

$$-2\ln\lambda(p, x) = 2\ln\frac{L(p_{best}, x)}{L(p, x)}$$

Binomial coverage simulation: maximum likelihood constraint

Feldman & Cousins, Phys. Rev. D 57 (1998) 3873

UNIFIED method

$$\sum_{k \in A}\frac{N!}{k!\,(N-k)!}\,p^k\,(1-p)^{N-k} \ge CL, \qquad A = \{k \mid k \ge 0 \text{ and } -2\ln\lambda(p,N,k) \le -2\ln\lambda(p,N,x)\}$$

$$-2\ln\lambda(p,N,x) = 2\left[x\ln\frac{f}{p} + (N-x)\ln\frac{1-f}{1-p}\right], \qquad f = x/N$$
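The likelihood-ratio ordering can be sketched in a few lines. The code below is an illustrative reading of the unified construction for the binomial case, not the author's program: for each p it adds values of k to the acceptance region in order of increasing −2 ln λ until the summed probability reaches CL, and then collects the values of p whose region contains the observed x. The function names and the grid scan over p are assumptions of this sketch.

```python
from math import comb, log


def neg2_log_lr(p, n, k):
    """-2 ln lambda = 2[k ln(f/p) + (n-k) ln((1-f)/(1-p))], with f = k/n."""
    f = k / n
    total = 0.0
    if k > 0:
        total += k * log(f / p)
    if k < n:
        total += (n - k) * log((1.0 - f) / (1.0 - p))
    return 2.0 * total


def acceptance_region(p, n, cl):
    """Add k values by increasing -2 ln lambda until the probability is >= CL."""
    ordered = sorted(range(n + 1), key=lambda k: neg2_log_lr(p, n, k))
    region, prob = [], 0.0
    for k in ordered:
        region.append(k)
        prob += comb(n, k) * p**k * (1.0 - p) ** (n - k)
        if prob >= cl:
            break
    return sorted(region), prob


def lr_interval(x, n, cl=0.90, steps=1000):
    """Smallest and largest p on a grid whose acceptance region contains x."""
    accepted = [i / steps for i in range(1, steps)
                if x in acceptance_region(i / steps, n, cl)[0]]
    return min(accepted), max(accepted)


if __name__ == "__main__":
    print(acceptance_region(0.25, 20, 0.90))
    print(lr_interval(5, 20, 0.90))
```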

[Plot: N = 50, CL = 0.90]

[Plot: N = 50, CL = 0.95]

[Plot: N = 20, CL = 0.90]

The problem persists also with large samples!

[Plot: levels 0.95, 0.90 and 0.86 marked]

[Plot: N = 20, CL = 0.90]

From coin tossing to physics: the efficiency measurement

arXiv:physics/0701199v1

Valid also for k = 0 and k = n

[Plot: N = 20, CL = 0.90, interval amplitude vs x for the likelihood, frequentist, Wilson cc and Wilson intervals]

[Plot: N = 20, CL = 0.90, interval limits vs x]

(2001)

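Formulas compared:

$$p \in \frac{f + \dfrac{t^2}{2n} \pm t\sqrt{\dfrac{t^2}{4n^2} + \dfrac{f(1-f)}{n}}}{1 + \dfrac{t^2}{n}}$$

$$n \gg 1:\quad p \in f \pm t\sqrt{\frac{f(1-f)}{n}}$$

and the exact frequentist binomial sums over k, each set equal to α/2.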

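Counting experiments: Poisson case

$$\frac{|x - \mu|}{\sqrt{\mu}} \le t$$

• Wilson interval (1934):

$$\mu \in x + \frac{t^2}{2} \pm t\sqrt{x + \frac{t^2}{4}}$$

• Wald (1950), standard in physics:

$$\mu \in x \pm t\sqrt{x}$$

• Exact frequentist, Clopper-Pearson (1934) (PDG):

$$\sum_{k=x}^{\infty}\frac{\mu_1^k}{k!}\,e^{-\mu_1} = \alpha/2, \qquad \sum_{k=0}^{x}\frac{\mu_2^k}{k!}\,e^{-\mu_2} = \alpha/2$$

• Bayes. This is not frequentist, but it can be tested in a frequentist way:

$$\frac{\int_{\mu_1}^{\mu_2}\frac{\mu^x}{x!}\,e^{-\mu}\,d\mu}{\int_0^{\infty}\frac{\mu^x}{x!}\,e^{-\mu}\,d\mu} = CL\ ?$$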

Poissonian coverage simulation

[Plot: CL = 68%]

[Plot: CL = 90%]

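The Neyman-FC integrals

Neyman integrals: the acceptance region q1 ≤ x ≤ q2 becomes the set A, with

$$\sum_{k \in A} p(k;\mu) \ge CL, \qquad A = \{k \mid k \ge 0 \text{ and } -2\ln\lambda(\mu,k) \le -2\ln\lambda(\mu,x)\}$$

$$-2\ln\lambda(\mu,x) = 2\ln\frac{L(\mu_{best},x)}{L(\mu,x)}$$

(minimize)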

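Poissonian coverage simulation: maximum likelihood constraint

Feldman & Cousins, Phys. Rev. D 57 (1998) 3873

$$\sum_{k \in A}\frac{\mu^k}{k!}\,e^{-\mu} \ge CL$$

$$-2\ln\lambda(\mu,n) = 2\ln\frac{n^n e^{-n}/n!}{\mu^n e^{-\mu}/n!} = 2\left[(\mu - n) + n\ln\frac{n}{\mu}\right]$$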

Poissonian coverage simulation

$$\sum_{k \in A}\frac{\mu^k}{k!}\,e^{-\mu} \ge CL, \qquad A = \left\{k : \frac{\mu^k}{k!}\,e^{-\mu} \ge \frac{\mu^n}{n!}\,e^{-\mu}\right\}$$

[Plot: CL = 68%]

[Plot: CL = 90%]

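Counting experiments: new formula for the Poisson case

$$\frac{|x_\pm - \mu|}{\sqrt{\mu}} \le t \quad\Rightarrow\quad \mu \in x_\pm + \frac{t^2}{2} \pm t\sqrt{x_\pm + \frac{t^2}{4}}, \qquad x_\pm = x \pm 0.5$$

Wilson interval with continuity correction gives the same results as …

Exact frequentist, Clopper-Pearson (1934) (PDG):

$$\sum_{k=x}^{\infty}\frac{\mu_1^k}{k!}\,e^{-\mu_1} = \alpha/2, \qquad \sum_{k=0}^{x}\frac{\mu_2^k}{k!}\,e^{-\mu_2} = \alpha/2$$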
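To see how close the continuity-corrected Wilson formula comes to the exact Poisson limits, both can be evaluated for a given count. The sketch below solves the two Poisson sums by bisection and applies the corrected formula with x ± 0.5; the function names, the bisection bracket and the choice of a zero lower limit for x = 0 are assumptions of this sketch.

```python
from math import exp, sqrt
from statistics import NormalDist


def poisson_cdf(k, mu):
    """P(X <= k) for a Poisson variable with mean mu."""
    term = total = exp(-mu)
    for i in range(1, k + 1):
        term *= mu / i
        total += term
    return total


def exact_poisson_interval(x, cl=0.90):
    """Central interval from the two Poisson sums, each set to alpha/2."""
    alpha = 1.0 - cl

    def solve(func, target):  # func is decreasing in mu
        lo, hi = 0.0, x + 10.0 * sqrt(x + 1.0) + 10.0
        for _ in range(100):
            mid = 0.5 * (lo + hi)
            if func(mid) > target:
                lo = mid
            else:
                hi = mid
        return 0.5 * (lo + hi)

    # Lower limit: P(X >= x | mu1) = alpha/2, i.e. P(X <= x-1 | mu1) = 1 - alpha/2.
    mu1 = 0.0 if x == 0 else solve(lambda m: poisson_cdf(x - 1, m), 1.0 - alpha / 2.0)
    # Upper limit: P(X <= x | mu2) = alpha/2.
    mu2 = solve(lambda m: poisson_cdf(x, m), alpha / 2.0)
    return mu1, mu2


def wilson_cc_poisson(x, cl=0.90):
    """Wilson interval with continuity correction, using x -/+ 0.5."""
    t = NormalDist().inv_cdf(0.5 + cl / 2.0)
    xm, xp = x - 0.5, x + 0.5
    lo = 0.0 if x == 0 else xm + t * t / 2.0 - t * sqrt(xm + t * t / 4.0)
    hi = xp + t * t / 2.0 + t * sqrt(xp + t * t / 4.0)
    return lo, hi


if __name__ == "__main__":
    print("exact    :", exact_poisson_interval(5))
    print("Wilson cc:", wilson_cc_poisson(5))
```

For x = 5 and CL = 90% the two intervals agree to within a few tenths of a count in this sketch.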

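The Unitarity Triangle

$$V = \begin{pmatrix} V_{ud} & V_{us} & V_{ub} \\ V_{cd} & V_{cs} & V_{cb} \\ V_{td} & V_{ts} & V_{tb} \end{pmatrix}, \qquad V^{\dagger}V = I$$

(rows u, c, t; columns d, s, b)

• Quark mixing is described by the CKM matrix

• Unitarity relations on matrix elements lead to a triangle in the complex plane

$$V_{ud}V_{ub}^* + V_{cd}V_{cb}^* + V_{td}V_{tb}^* = 0$$

[Figure: triangle with vertices A = (ρ, η), B = (1, 0), C = (0, 0) and sides $V_{ud}V_{ub}^*/V_{cd}V_{cb}^*$ and $V_{td}V_{tb}^*/V_{cd}V_{cb}^*$]

CP Violation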

Luca Lista, Statistical Methods for Data Analysis

A Bayesian application: UTFit

• UTFit: Bayesian determination of the CKM unitarity triangle

– Many experimental and theoretical inputs combined as a product of PDFs

– Resulting likelihood interpreted as Bayesian PDF in the UT plane

• Inputs:

– Standard Model experimental measurements and parameters

– Experimental constraints

Combine the constraints

• Given {xi} parameters and {ci} constraints

• Define the combined PDF

– ƒ(ρ, η, x1, x2, ..., xN | c1, c2, ..., cM) ∝ ∏j=1,M ƒj(cj | ρ, η, x1, x2, ..., xN) ∏i=1,N ƒi(xi) ⋅ ƒo(ρ, η)

A priori PDF

– PDF taken from experiments, wherever it is possible

• Determine the PDF of (ρ, η) integrating over the remaining parameters

– ƒ(ρ, η) ∝ ∫ ∏j=1,M ƒj(cj | ρ, η, x1, x2, ..., xN) ∏i=1,N ƒi(xi) ⋅ ƒo(ρ, η)

Unitarity Triangle fit

[Figure: 68% and 95% contours]

PDFs for ρ and η

Projections on other observables

A Frequentist application: RFit

• RFit: choose a point in the (ρ, η) plane and ask for the best set of parameters for this point. The χ² values give the requested confidence region.

• No a priori distribution of the parameters is required

$$-2\ln L(x;\mu) = -2\ln e^{-\frac{1}{2}\frac{(x-\mu)^2}{\sigma^2}} = \frac{(x-\mu)^2}{\sigma^2} = \chi^2(\mu)$$

Conclusions

• The usual formulae used by physicists in counting experiments should be abandoned

• By adopting a practical attitude, Bayesian formulae can also be tested in a frequentist way

• Frequentism is the best way to give the results of an experiment in the form x ± σ, but other forms are also possible

• Physicists should use Bayes formulae to parametrize the previous (theoretical or experimental) knowledge, not the ignorance

Quantum Mechanics: frequentist or Bayesian?

Born or Bohr?

$$|\psi|^2\,dx$$

The standard interpretation is frequentist

END

Neyman integrals

Bootstrap

$$F(x;\theta) = 1 - F(\theta;x)$$

Search for pivotal variables

This method avoids the graphic procedure and the resolution of the Neyman integrals