Multivariate Statistical Analysis

PROF. BENITO VITTORIO FROSINI

COURSE AIMS

To introduce the main techniques of multivariate statistical analysis, presented mainly from a descriptive standpoint. So that the subjects can be fully understood, the first part of the course reviews some topics in matrix algebra and geometry. These are then applied immediately to data matrices so that their statistical meaning is clear. The theory is backed up with practical exercises using statistical software packages designed specifically for this kind of analysis.

COURSE CONTENT

1. Review of, and supplementary topics in, matrix algebra and multidimensional geometry. Linear spaces, lines, distances and projections in multidimensional spaces. The least squares method applied to distances; lines of best fit.

2. Data, covariance and correlation matrices. Linear dependencies and rank of a matrix. Mean and variance of a linear combination of random variables. Vector of means and covariance matrix of a linear transformation. Multivariate distributions; bivariate and multivariate normal distribution. Cochran's theorem.

3. Principal component analysis. Derivation of principal components using Hotelling's method and Pearson's method. Coefficients of the components and correlations between variables and components. Geometric aspects of principal components. Applications of principal components.

4. Factor analysis. The canonical model for factor analysis. Statistical meaning of model parameters. Structure of the covariance matrix underlying the model. The problem of rotations. Model identification. Nonparametric techniques for estimating model parameters, in particular Howe's method. Maximum likelihood parameter estimation. Procedures for estimating factor scores.

5. Correspondence analysis. Fields of application for correspondence analysis. Distance between profiles and chi-squared metrics. Inertia breakdown and individual values. Graphical representations.

6. Discriminant analysis. Mahalanobis distance. Fisher's discriminant function for two populations: parametric and nonparametric approaches. Probability of misclassification and its minimization. Nonparametric approach in the case of k populations, including the use of loss functions.

7. Cluster analysis. Distance and dissimilarity indices, in particular the Mahalanobis distance and its main properties. Dichotomous and polytomous cases. Purposes of cluster analysis and main clustering techniques. Hierarchical and non-hierarchical criteria.

8. Multidimensional scaling (MDS). Purposes of multidimensional scaling. Classical and ordinal scaling. Relationship between classical scaling and principal components.

9. Neural networks. Main types of artificial neural networks. Multi-layer perceptron and radial basis function networks. Learning rules, network training and error correction. The overfitting problem.

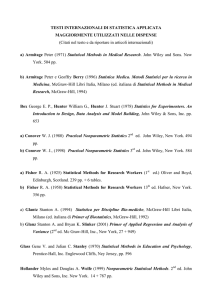

READING LIST

C. CHATFIELD-A.J. COLLINS, Introduction to Multivariate Analysis, Chapman & Hall, London.

M. FRAIRE-A. RIZZI, Analisi dei dati per il Data Mining, Carocci, Rome.

B.V. FROSINI, Connessione, regressione, correlazione, Celuc, Milan, 3rd ed.

B.V. FROSINI, Analisi di regressione, con Appendice su Vettori e Matrici, EDUCatt, Milan.

S. MIGNANI-A. MONTANARI, Appunti di Analisi statistica multivariata, Esculapio, Bologna.

D.F. MORRISON, Multivariate statistical methods, McGraw-Hill, New York.

S. ZANI-A. CERIOLI, Analisi dei dati e data mining per le decisioni aziendali, Giuffrè, Milan.

B.V. FROSINI, Complementi di Analisi Statistica Multivariata, EDUCatt Università Cattolica, Milan.

TEACHING METHOD

Lectures and exercises in the computer laboratory.

ASSESSMENT METHOD

Written examinations.

NOTES

Further information can be found on the lecturer's webpage at http://docenti.unicatt.it/web/searchByName.do?language=ENG or on the Faculty notice board.